Followup To: [Data Partitioning: How To Repair Explanation]

If I have seen a little further, it is by standing on the shoulders of giants

– Isaac Newton

Table Of Contents

- Context

- The Optimization Level Of Science

- The Fruits Of Science

- Translation Proxy Lag

- Cheap Explanations

- Overfitting: Failure Mode of Meat Science

- Takeaways

Context

Last time, we learned two things:

- Explainers who solely optimize against prediction error are in a state of sin.

- Data partitions immunize abductive processes against overfitting.

Today, we will apply these results to human scientific processes, or to what I will affectionately call meat science.

The Optimization Level Of Science

Nietzsche captures the reputation of science well:

Science is flourishing today and her good conscience is written all over her face, while the level to which all modern philosophy has gradually sunk… philosophy today, invites mistrust and displeasure, if not mockery and pity. It is reduced to “theory of knowledge”… how could such a philosophy dominate? … The scope and the tower-building of the sciences has grown to be enormous, and with this also the probability that the philosopher grows weary while still learning….

Grounding such sentiments in something rigorous exceeds the scope of this post. Today, we simply accept that science is particularly epistemically productive. But let us move beyond the cheerleading, and ask ourselves why this is so.

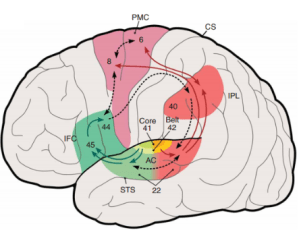

If I were to hand over a map of our species’ cognitive architecture to an alien species, I would expect them to predict demagogues much more easily than the discovery of the Higgs boson. The simple truth is that our minds are flawed: we are born with clumsy inference machinery. How then is epistemic productivity possible?

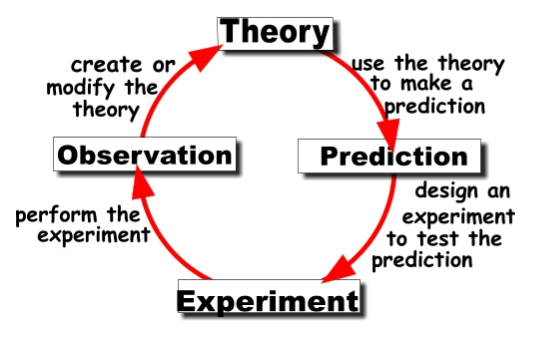

The success of science has been said to derive from the scientific method:

But does the scientific method lend itself to the debiasing of the human animal? I argue it does not. Tribalism in scientific communities, for example, doesn’t seem particularly muted compared to other realms of human experience. Further, in his classic text The Structure of Scientific Revolutions, Thomas Kuhn showed that scientific revolutions emerge from strong, extra-rational motives. In his view, the nature of paradigm shifts is a bit like mystical religious experience: deeply personal, and difficult to verbalize.

I like to imagine science as a socio-historical process. Individuals and even sub-communities within its disciplines may fail to track what is Really There, but communities on the whole tend to move in this direction. As Max Planck once observed:

A scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die and a new generation grows up that is familiar with it.

The question of how the scientific method facilitates socio-historical truth-tracking is, I believe, unsolved. But all I must do today is flag the optimization level of science. If science organically debiases our species at the socio-historical level, there is much room for improvement. If cognitive science can forge new debiasing weapons, we will become increasingly able to transcend ourselves, able to move faster than science.

The Fruits Of Science

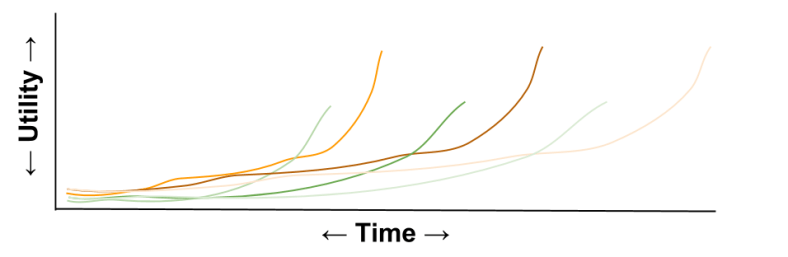

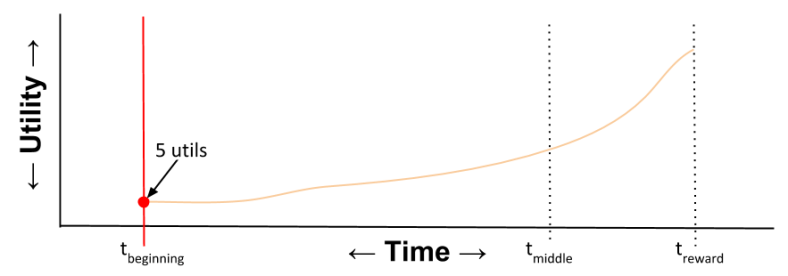

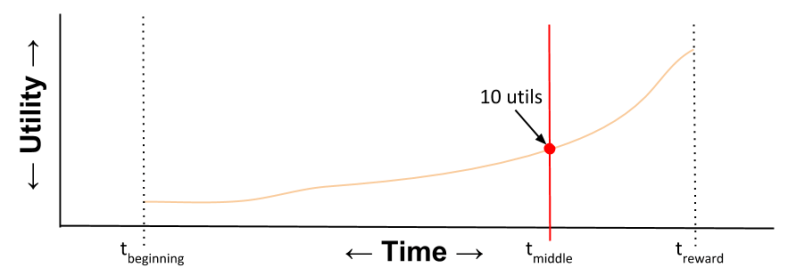

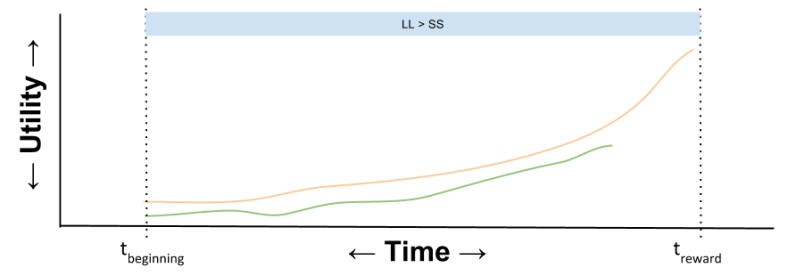

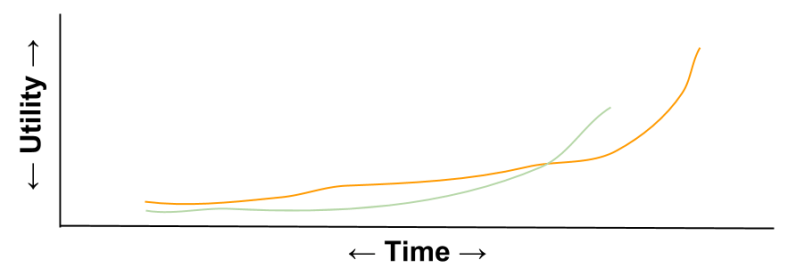

Consider again the epigraph: “If I have seen a little further, it is by standing on the shoulders of giants.”

This meme is sticky, and signals humility… but it also frames a crisis.

The shoulders keep growing taller.

Because of the accretive nature of science, our collective knowledge far outpaces our cognitive abilities. Even if the controversial Flynn effect is true and our collective IQ really is improving over time, the size of our databases would still outstrip the reach of our cognitive cone.

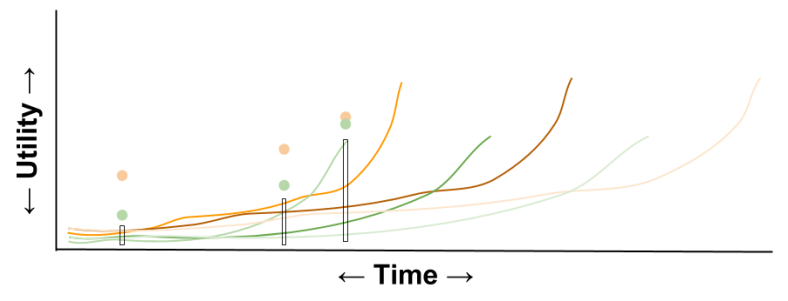

Various technologies have been invented that assuage this crisis. Curriculum compression is one of the older ones. That which was originally available as an anthology of journal articles is compressed into a review article, review articles compressed to graduate courses, graduate courses polished into undergraduate presentations. Consider how Newton would marvel at a present-day course in differential calculus: 300 years of mathematical research, successfully installed into an undergraduate’s semantic memory in a matter of weeks.

Curriculum compression is but one answer to our exploding knowledge base. Other implicit reactions include:

- the narrowing of research trajectories

- the fractionating of disciplines into the Ivory Archipelago

- research crowdsourcing (the de-popularization of the Lone Intellectual Warrior model)

In the years ahead, society seems geared to add two more solutions to our “bag of tricks”:

- the cognitive reform of education technology

- the mechanization of science

And yet, the shoulders keep growing taller…

Translation Proxy Lag

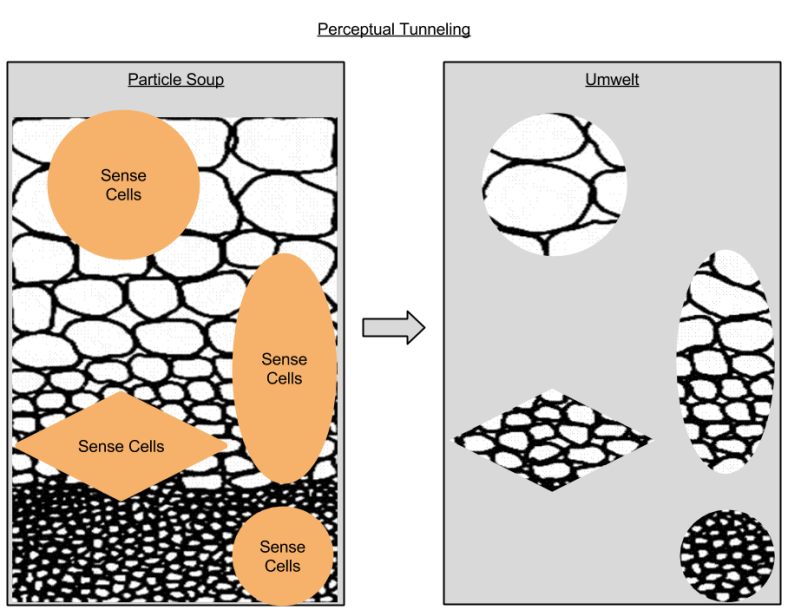

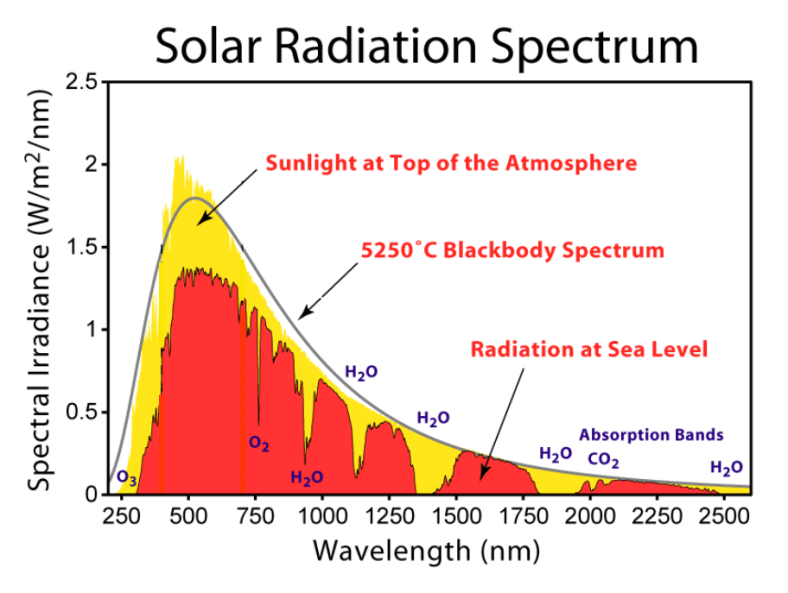

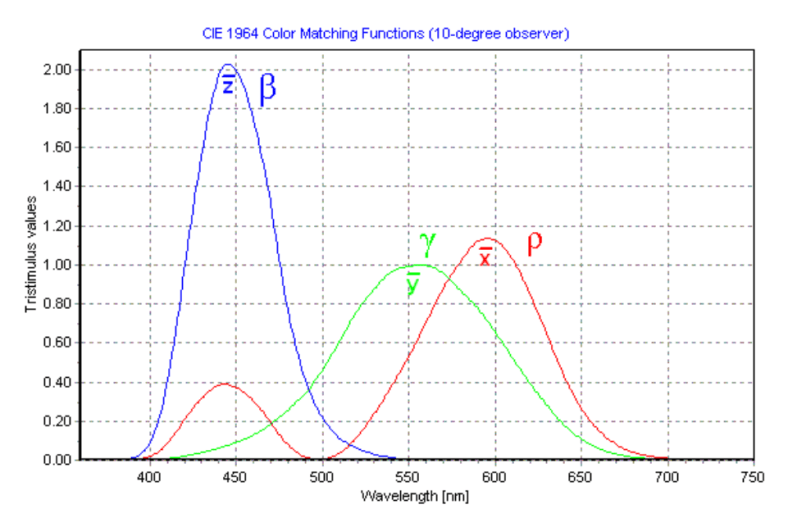

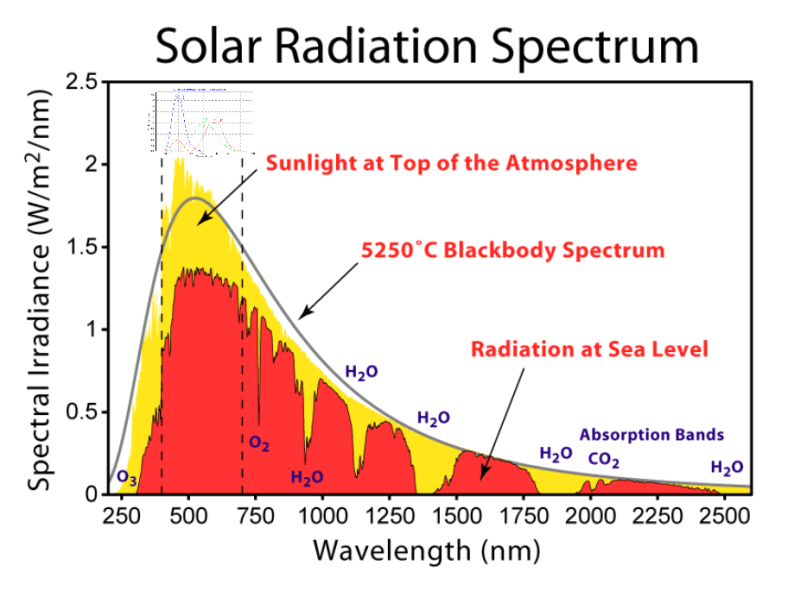

In Knowledge, an Empirical Sketch, I introduced measurement under the title of translation proxies. Why the strange name? To underscore the nature of measurement: forcibly relocating sensory phenomena from their “natural habitat” towards a signature that our bodies are equipped to sense. In this light, a translation proxy can be viewed as a kind of faucet, bringing novel forms of physical reality into the human umwelt.

But consider physics, where the “low-hanging fruit” translation proxies have already been built. You don’t see many physicists clamoring for new hand-held telescopes. Instead, you see them excited over ultra-high precision telescope mirrors, over particle colliders producing energies in the trillions of electronvolts. Such elaborate proxy technologies simply do not flow as quickly from the furnace of invention. Call this phenomenon data intake stagnation.

Not only are our proxy innovations becoming more infrequent, but they are also severely outpaced by our theoreticians. In physics, M-theory (the generalization of string theory) posits entities at the 10^-34 m scale, but our current microscopes can only interrogate resolutions around the 10^-15 m scale. In neuroscience, connectome research programs seek to graph the nervous system at the neuronal level (10^-6 m), but most imaging technologies only support the millimeter (10^-3 m) range. Call this phenomenon translation proxy lag.
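To make the size of this lag concrete, here is a minimal Python sketch that counts the orders of magnitude separating each theory's scale from its instrument's resolution. The exponents are the ones quoted above; the dictionary layout and the function name `lag_in_orders_of_magnitude` are illustrative assumptions, not part of any established tool.

```python
# Length scales quoted in the text, stored as base-10 exponents (in metres).
# The field names and structure here are illustrative assumptions.
SCALES = {
    "physics (M-theory vs. current instruments)": {"theory_exp": -34, "instrument_exp": -15},
    "neuroscience (connectome vs. most imaging)": {"theory_exp": -6, "instrument_exp": -3},
}


def lag_in_orders_of_magnitude(theory_exp: int, instrument_exp: int) -> int:
    """Return how many powers of ten finer the theory's scale is than the instrument's."""
    return instrument_exp - theory_exp


for field, s in SCALES.items():
    lag = lag_in_orders_of_magnitude(s["theory_exp"], s["instrument_exp"])
    print(f"{field}: {lag} orders of magnitude")
```

On these figures, the gap is 19 orders of magnitude in physics and 3 in neuroscience.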

It will be decades, perhaps centuries, before our measurement technologies catch up.

Cheap Explanations

Let us bookmark translation proxy lag, and consider a different sort of problem.

Giant shoulders are not merely growing taller. They also render impotent evidence once vital to theoreticians. Let me appeal to a much-cited page from history, to illustrate.

During World War I, Sir Arthur Eddington was Secretary of the Royal Astronomical Society, which meant he was the first to receive a series of letters and papers from Willem de Sitter regarding Einstein’s theory of general relativity. […] He quickly became the chief supporter and expositor of relativity in Britain. […]

After the war, Eddington travelled to the island of Príncipe near Africa to watch the solar eclipse of 29 May 1919. During the eclipse, he took pictures of the stars in the region around the Sun. According to the theory of general relativity, stars with light rays that passed near the Sun would appear to have been slightly shifted because their light had been curved by its gravitational field. This effect is noticeable only during eclipses, since otherwise the Sun’s brightness obscures the affected stars. Eddington showed that Newtonian gravitation could be interpreted to predict half the shift predicted by Einstein.

Eddington’s observations published the next year confirmed Einstein’s theory, and were hailed at the time as a conclusive proof of general relativity over the Newtonian model. The news was reported in newspapers all over the world as a major story:

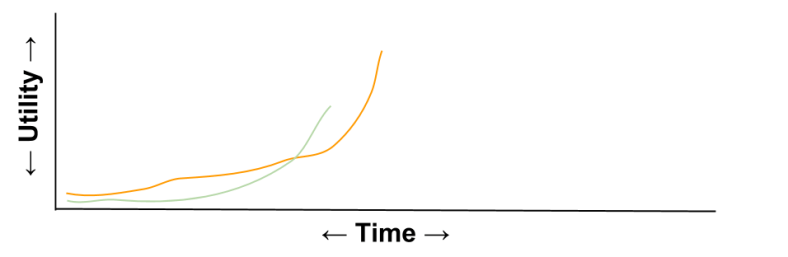

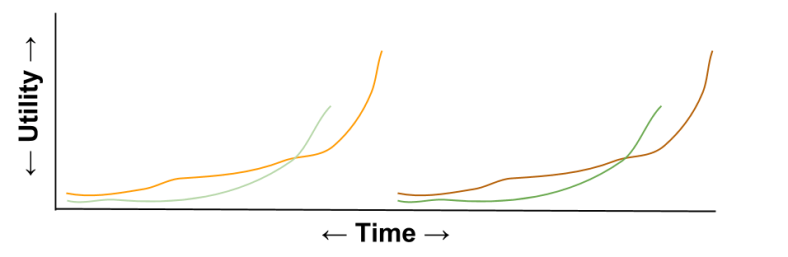

Two competing theories, two perfectly adequate explanations for everyday phenomena, one test to differentiate models. Here we see a curiosity: scientists place a high value on new data. Gravitational lensing constituted powerful confirmation because, as far as the model-creators knew, it could have gone the other way.

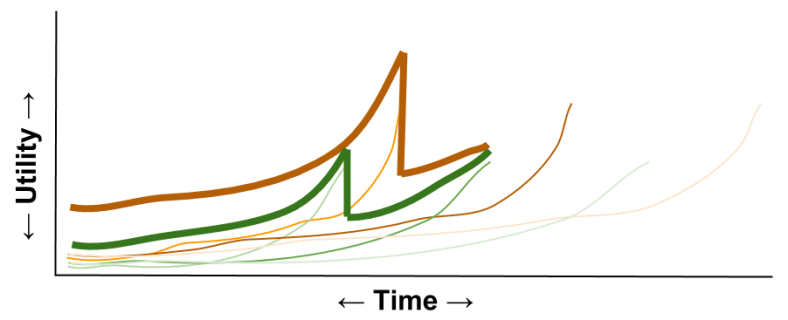

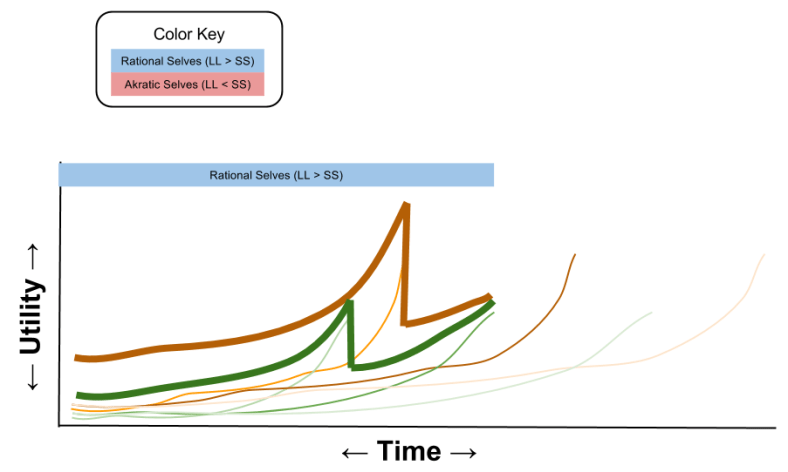

A nice, clean narrative. But consider what happens next. General relativity has begun to show its age: it is chronically incompatible with quantum mechanics. Many successors to general relativity have been created; let us call them Quantum Gravity Theory A, B, and C. Frustratingly, no discrepancies have been found between these theory-universes and our observed-universe.

How are multiple correct options possible? From a computational perspective, the phenomenon of multiple correct answers can be modeled with Solomonoff Induction. A less precise, philosophical precursor of the same moral can be found in underdetermination of theory.
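As a rough illustration of the computational view, the sketch below weights each data-consistent theory by 2 to the power of minus its description length, then normalizes. This is only a finite toy: real Solomonoff Induction sums over all programs for a universal machine and is uncomputable. The theory labels, the bit counts, and the function name `simplicity_prior` are all invented for illustration.

```python
from fractions import Fraction

# Hypothetical description lengths, in bits, for three theories that all
# reproduce the observed data. These numbers are made up for illustration.
DESCRIPTION_LENGTH_BITS = {"A": 10, "B": 12, "C": 12}


def simplicity_prior(lengths: dict[str, int]) -> dict[str, Fraction]:
    """Weight each theory by 2^-length, then normalize so the weights sum to 1."""
    raw = {name: Fraction(1, 2 ** bits) for name, bits in lengths.items()}
    total = sum(raw.values())
    return {name: weight / total for name, weight in raw.items()}


prior = simplicity_prior(DESCRIPTION_LENGTH_BITS)
for name, weight in prior.items():
    print(name, weight)
```

Every theory that fits the data keeps nonzero weight; shorter ones simply get more of it. Multiple correct answers are the normal case, not an anomaly.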

But which theory wins? General relativity won via a solar eclipse generating evidence for gravitational lensing. But gravitational lensing is now “old hat”; so long as all theories accommodate its existence, it no longer wields theory-discriminating power. And – given the translation proxy lag crisis outlined above – it may take some time before our generation acquires its test, its analogue of a solar eclipse.
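The loss of discriminating power can be sketched with a plain Bayesian update. In the minimal Python example below, evidence that every theory predicts equally well leaves the credences exactly where they started, while a test on which the theories disagree moves them. The three theories, the likelihood values, and the function name `bayes_update` are hypothetical, chosen only to make the arithmetic visible.

```python
from fractions import Fraction


def bayes_update(prior, likelihood):
    """Return the posterior over theories given P(observation | theory)."""
    unnormalized = {t: prior[t] * likelihood[t] for t in prior}
    evidence = sum(unnormalized.values())
    return {t: p / evidence for t, p in unnormalized.items()}


# Equal starting credence in three hypothetical theories.
prior = {t: Fraction(1, 3) for t in ("A", "B", "C")}

# "Old hat" data: every theory accommodates it equally well.
lensing = {"A": Fraction(99, 100), "B": Fraction(99, 100), "C": Fraction(99, 100)}
after_lensing = bayes_update(prior, lensing)

# A hypothetical novel test on which the theories disagree (made-up numbers).
novel = {"A": Fraction(9, 10), "B": Fraction(3, 10), "C": Fraction(3, 10)}
after_novel = bayes_update(after_lensing, novel)

print(after_lensing)
print(after_novel)
```

When all likelihoods are equal, the likelihood ratio between any two theories is 1, so the observation cannot favor one over another no matter how impressive it once was.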

Can anything be done in the meantime? Is science capable of discriminating between QGT/A, QGT/B, and QGT/C in the absence of a clean novel prediction? When we choose to invest more of our lives in one particular theory above the others, are we doomed to make this choice by mere dint of our aesthetic cognitive modules, by a sense of social belonging, by noise in our yedasentiential signals?

Overfitting: Failure Mode of Meat Science

Bayesian inference teaches us that confidence is usefully modeled as a probabilistic thing. But stochasticity is not equiprobability: discriminability is a virtue. If science cannot provide it, let us cast about for ways to reform science.

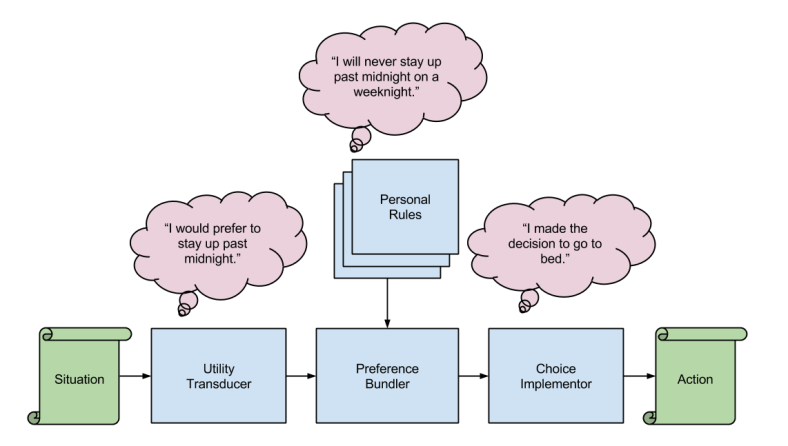

Let us begin our search by considering the rhetoric of explanation. What does it mean for a hypothesis to be criticized as ad-hoc?

> Scientists are often skeptical of theories that rely on frequent, unsupported adjustments to sustain them. This is because, if a theorist so chooses, there is no limit to the number of ad hoc hypotheses that they could add. Thus the theory becomes more and more complex, but is never falsified. This is often at a cost to the theory’s predictive power, however. Ad hoc hypotheses are often characteristic of pseudoscientific subjects.

Do you recall how we motivated data partitioning? Do you yet recognize the stench of overfitting?
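The resemblance can be made concrete with a toy model. The Python sketch below treats a simple law as the base theory and attaches one ad hoc correction per known observation. The law `y = 2x`, the hand-picked noise values, the known/novel split, and all function names are assumptions made for illustration, not a model of any real scientific dispute.

```python
def base_theory(x):
    """The unpatched theory: a simple law, y = 2x (an illustrative assumption)."""
    return 2 * x


# Hand-picked toy observations: the true law plus measurement noise.
NOISE = [0.5, -0.3, -0.8, 0.6, 0.1, 0.9, -0.4, -0.2, 0.7, -0.5]
DATA = [(x, 2 * x + NOISE[x]) for x in range(10)]
KNOWN = DATA[0::2]   # data the theorist has already seen
NOVEL = DATA[1::2]   # data held back until the theory is fixed

# One ad hoc patch per known observation: whatever residual the base theory left.
PATCHES = [(x, y - base_theory(x)) for x, y in KNOWN]


def patched_theory(x):
    """Base theory plus the ad hoc correction attached to the nearest known case."""
    _, correction = min(PATCHES, key=lambda patch: abs(patch[0] - x))
    return base_theory(x) + correction


def mean_squared_error(theory, data):
    """Average squared gap between a theory's predictions and the observations."""
    return sum((theory(x) - y) ** 2 for x, y in data) / len(data)


for name, theory in [("base", base_theory), ("patched", patched_theory)]:
    print(name,
          "known:", round(mean_squared_error(theory, KNOWN), 3),
          "novel:", round(mean_squared_error(theory, NOVEL), 3))
```

The patched theory fits the known data essentially perfectly, yet does worse than the unpatched law on the held-back observations. The patches have memorized noise.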

In fact, in my view, current scientific practice is a bit too uncomfortable with falsification. Karl Popper lionized falsifiability, and the result of his movement has been an increase in the operationalization and measurement-affinity of the scientific grammar. But the weaving together of scientific abstractions and the particle soup, the dawning taboo around Not Even Wrong, came with baggage.

Logically, no number of positive outcomes at the level of experimental testing can confirm a scientific theory, but a single counterexample is logically decisive: it shows the theory, from which the implication is derived, to be false.

But compare this dictum with our result from machine learning, which suggests that perhaps small “falsifications” may be preferable to “getting everything right”:

Explainers who solely optimize against prediction error are in a state of sin.

In sum, we have reason to believe that overfitting is the pervasive illness of our meat science.

Takeaways

- Science tracks truth not at the level of the individual, but on a socio-historical scale. There is therefore room to move faster than science.

- Science is accretive: the shoulders keep growing taller.

- Due to constraints in measurement technology, data of a truly novel character has become increasingly difficult to acquire.

- In meat science, as data transitions from “novel” to “known”, it loses its ability to wield theory-discriminating power.

- Given these dual crises, and the philosophical commitments scientific communities have made against falsified hypotheses, science can be shown to suffer from overfitting.

Next time, we will explore applying the machine learning solution to overfitting – data partitioning – to meat science, and motivate the virtue of hiding data from ourselves. See you then!