Part Of: Machine Learning sequence

Followup To: Bias vs Variance, Gradient Descent

Content Summary: 1100 words, 11 min read

In Intro to Gradient Descent, we discussed how loss functions allow optimization methods to locate high-performance models.

But in Bias vs Variance, we discussed how model performance isn’t the only thing that matters. Simplicity promotes generalizability.

One way to enhance simplicity is to take the model discovered by gradient descent and manually remove unnecessary parameters.

But we can do better. In order to automate parsimony, we can embed our preference for simplicity into the loss function itself.

But first, we need to quantify our intuitions about complexity.

Formalizing Complexity

Neural networks are often used as classification models for large collections of images. The complexity of these models tends to correlate with the number of layers. For some models, then, complexity is captured by the number of parameters.

While not used much in industry, polynomial models are pedagogically useful examples of regression models. Here, the degree of the polynomial expresses the complexity of the model: a degree-eight polynomial has more “bumps” than a degree-two polynomial.

Consider, however, the difference between the following regression models

Model A uses five parameters; Model B uses three. But their predictions are, for all practical purposes, identical. Thus, the size of each parameter is also relevant to the question of complexity.
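
To make this concrete, here is a minimal sketch with two hypothetical polynomial models. The coefficients are invented for illustration and are not the ones from the original comparison. Model A has five parameters, two of them tiny; Model B has three.

```python
def predict(coeffs, x):
    """Evaluate a polynomial whose coefficients run from the constant term upward."""
    return sum(c * x**k for k, c in enumerate(coeffs))

# Hypothetical coefficients, invented for illustration.
model_a = [1.0, 2.0, 0.5, 1e-6, -1e-6]  # five parameters, two of them tiny
model_b = [1.0, 2.0, 0.5]               # three parameters

xs = [i / 10 for i in range(-20, 21)]   # inputs in [-2, 2]
max_gap = max(abs(predict(model_a, x) - predict(model_b, x)) for x in xs)
print(f"largest prediction gap on [-2, 2]: {max_gap:.2e}")
```

On this input range the two models disagree by a negligible amount, even though one has two more parameters than the other.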

The above approaches rely on the model’s parameters (its “visceral organs”) to define complexity. But it is also possible to rely on the model’s outputs (its “behaviors”) to the same end. Consider again the classification decision boundaries above. We can simply measure the spatial frequency (the “squiggliness” of the boundary) as another proxy for complexity.

Here, then, are three possible criteria for complexity:

- Number of parameters

- Size of parameters

- Spatial frequency of decision manifold

Thus, operationalizing the definition of “complexity” is surprisingly challenging.

Mechanized Parsimony

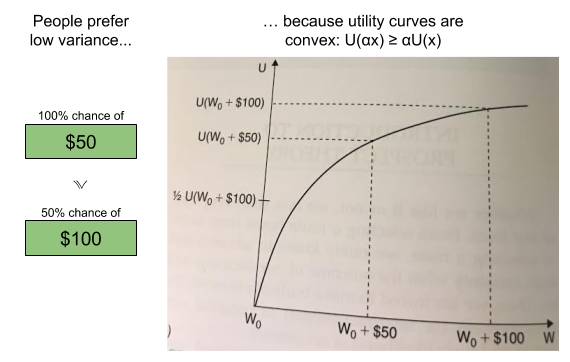

Recall our original notion of the performance-complexity quadrant. By defining our loss function exclusively in terms of the residual error, gradient descent learns to prefer accurate models (to “move upward”). Is there a way to induce leftward movement as well?

To have gradient descent respond to both criteria, we can embed them into the loss function. One simple way to accomplish this: addition.

This technique is an example of regularization.

Depending on the application, the errors are sometimes much larger than the parameters, or vice versa. To ensure the right balance between these terms, people usually add a hyperparameter to the regularized loss function, which scales the complexity term relative to the error term.
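
Here is a minimal sketch of such a loss. It assumes mean squared error as the residual term and the sum of squared parameters as the complexity term. Both are illustrative choices, and `lam` is a hypothetical name for the hyperparameter.

```python
def mse(params, xs, ys):
    """Residual term: mean squared error of the line y = params[0] + params[1] * x."""
    errors = [(params[0] + params[1] * x) - y for x, y in zip(xs, ys)]
    return sum(e * e for e in errors) / len(errors)

def complexity(params):
    """Complexity term: sum of squared parameters (an assumed choice)."""
    return sum(p * p for p in params)

def regularized_loss(params, xs, ys, lam):
    """Residual error plus lam times complexity; lam sets the balance."""
    return mse(params, xs, ys) + lam * complexity(params)

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # generated by y = 1 + 2x
params = [1.0, 2.0]         # perfect fit, so the residual term is zero

for lam in (0.0, 0.1, 1.0):
    print(lam, regularized_loss(params, xs, ys, lam))
```

With `lam = 0` the perfect fit costs nothing. As `lam` grows, the same parameters become more expensive, so gradient descent is nudged towards smaller ones.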

A Geometric Interpretation

Recall Einstein’s insight that gravity is curvature of spacetime. You can envision such curvature as a ball pulling on a sheet. Here is the gravity well of bodies of the solar system:

Every mass pulls on every other mass! Despite the appearance of the above, Earth does “pull on” Saturn.

The unregularized cost function we saw last time creates a convex loss surface, which we’ll interpret as a gravity well centered around the parameters of best fit. If we replace J with a function that only penalizes complexity, a corresponding gravity well appears, centered around parameters of zero size.

If we keep both terms, we see the loss surface now has two enmeshed gravity wells. If scaled appropriately, the “zero attractor” will pull the most performant solution towards a not-much-worse yet simpler model.
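
The tug-of-war between the two wells can be sketched with a single parameter. I assume a fit well `(theta - best_fit)**2` and a zero well `lam * theta**2`. These quadratics are illustrative stand-ins, not the loss from the original figures.

```python
def gradient(theta, best_fit, lam):
    """Derivative of (theta - best_fit)**2 + lam * theta**2 with respect to theta."""
    return 2 * (theta - best_fit) + 2 * lam * theta

def descend(best_fit, lam, theta=10.0, lr=0.1, steps=500):
    """Plain gradient descent on the combined loss."""
    for _ in range(steps):
        theta -= lr * gradient(theta, best_fit, lam)
    return theta

best_fit = 3.0
for lam in (0.0, 0.5, 2.0):
    theta = descend(best_fit, lam)
    print(f"lam={lam}: theta settles near {theta:.4f}")
```

Setting the gradient to zero shows the compromise sits at `best_fit / (1 + lam)`: the larger the weight on the zero well, the closer the solution is dragged towards zero.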

More on L1 vs L2

Previously, I introduced the L1 norm, which, averaged over the data, gives mean absolute error (MAE).

Another loss function is the L2 norm, which, up to a scaling by the number of examples, is root mean squared error (RMSE).

The L1 and L2 norms correspond to Manhattan and Euclidean distance, respectively (roughly, car vs plane travel):

One useful way to view norms is by their isosurface. If you can travel in any direction for a fixed amount of time, the isosurface is the frontier you could reach.

The L2 isosurface is a circle. The L1 isosurface is a diamond, because under the L1 norm:

- If you travel along a single axis (never changing direction), you cover the “normal” L2 distance.

- If you do change direction, your travel becomes inefficient (since “diagonal” travel along the hypotenuse is forbidden).
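
A quick numeric check of the two cases above, using made-up points:

```python
def l1_distance(a, b):
    """Manhattan distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def l2_distance(a, b):
    """Euclidean distance: square root of the sum of squared differences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

origin = (0.0, 0.0)
straight = (5.0, 0.0)   # reached without changing direction
diagonal = (3.0, 4.0)   # requires a turn under the L1 norm

for name, point in (("straight", straight), ("diagonal", diagonal)):
    print(name, l1_distance(origin, point), l2_distance(origin, point))
```

Both points are 5 units away as the crow flies, but the diagonal one costs 7 units of Manhattan travel. That is why the L1 frontier is pulled inward everywhere except along the axes.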

The Lp Norm as Superellipse

Consider again the formulae for the L1 and L2 norm. We can generalize these as special cases of the Lp norm:
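
For reference, here is the standard definition, written for a generic vector x with n components. The symbols are an assumption and may differ from the notation used earlier in this sequence.

```latex
\|x\|_p = \left( \sum_{i=1}^{n} |x_i|^p \right)^{1/p},
\qquad
\|x\|_1 = \sum_{i=1}^{n} |x_i|,
\qquad
\|x\|_2 = \sqrt{\sum_{i=1}^{n} x_i^2}
```

Setting p = 1 or p = 2 in the general formula recovers the two special cases on the right.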

Here are isosurfaces of six exemplars of this norm family:

On inspection, the shapes in the above image inflate with increasing p, growing from a diamond (p = 1) through a circle (p = 2) towards a square. In fact, the Lp norm generates a superellipse.

As an aside, note that the extremes of the Lp norm family operationalize complexity rather “intuitively”. For the L0 “norm” (strictly speaking not a true norm), complexity is the number of non-zero parameters. For the Linf norm, complexity is the size of the largest parameter.
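
A small sketch of the family and its two extremes, applied to a hypothetical parameter vector. The helper names are my own.

```python
def lp_norm(params, p):
    """Lp norm for finite p >= 1."""
    return sum(abs(v) ** p for v in params) ** (1.0 / p)

def l0_count(params):
    """The L0 'norm': how many parameters are non-zero."""
    return sum(1 for v in params if v != 0)

def linf_norm(params):
    """The Linf norm: the size of the largest parameter."""
    return max(abs(v) for v in params)

params = [3.0, 0.0, -4.0]   # hypothetical parameters

for p in (1, 2, 4, 16, 64):
    print(p, lp_norm(params, p))
print("L0:", l0_count(params), "Linf:", linf_norm(params))
```

As p grows, the printed values approach the Linf norm: the largest parameter increasingly dominates the sum.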

Lasso vs Ridge Regression

Why the detour into geometry?

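Well, so far, we’ve expressed regularization as a residual norm plus a weighted parameter norm, where each norm could in principle be any member of the Lp family. But most engineers choose between the L1 and L2 norms. The L1 norm is convex but not smooth (it has a sharp corner at zero), which tends to make gradient descent more difficult. But the L1 norm is also more robust to outliers, and has other benefits.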

Here are two options for the residual norm:

$latex \|e\|_2$: sensitive to outliers, but a stable solution

$latex \|e\|_1$: robust to outliers, but an unstable solution

The instability of $latex \|e\|_1$ tends to be particularly thorny in practice, so $latex \|e\|_2$ is almost always chosen.
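
The outlier trade-off is easiest to see in a toy setting that I am assuming purely for illustration: the "model" is a single constant, fit to a handful of made-up numbers by brute-force search over a grid.

```python
def l1_loss(c, data):
    """Sum of absolute residuals when predicting the constant c."""
    return sum(abs(x - c) for x in data)

def l2_loss(c, data):
    """Sum of squared residuals when predicting the constant c."""
    return sum((x - c) ** 2 for x in data)

def best_constant(loss, data):
    """Brute-force search over candidates 0.0, 0.5, ..., 600.0."""
    grid = [i * 0.5 for i in range(0, 1201)]
    return min(grid, key=lambda c: loss(c, data))

clean = [1.0, 2.0, 3.0, 4.0, 5.0]
with_outlier = [1.0, 2.0, 3.0, 4.0, 500.0]   # one corrupted measurement

for name, data in (("clean", clean), ("with outlier", with_outlier)):
    print(name, best_constant(l1_loss, data), best_constant(l2_loss, data))
```

In this setting the L2 fit is the mean and the L1 fit is the median, so a single outlier drags the L2 answer far away while the L1 answer stays put. The flip side is that the L1 optimum need not be unique: with an even number of points, every value between the two middle points is equally good.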

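That leaves us with two remaining choices:

- Ridge Regression (L2 norm on the residuals, L2 norm on the parameters): computationally efficient, but non-sparse output.

- Lasso Regression (L2 norm on the residuals, L1 norm on the parameters): computationally inefficient, but sparse output.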

What does sparse output mean? For a given model type, Ridge regression tends to output parameters that are all small but non-zero, whereas Lasso tends to set some parameters to exactly zero. In effect, Lasso regression is performing feature selection: locating parameters that can be safely removed. Why should this be?

Geometry to the rescue!

In ridge regression, both gravity wells have smooth, rounded isosurfaces, so their compromises can be reached anywhere on the loss surface. In lasso regression, the diamond-shaped complexity isosurface tends to push compromises towards the corners of the diamond, which lie on the axes where one or more parameters are exactly zero. (In higher dimensions, the same geometry applies.)
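
The same effect shows up algebraically in the simplest case I can think of: each parameter is treated independently, with squared error `(theta - b)**2` around its best-fit value `b`, plus a penalty of either `lam * theta**2` (ridge-style) or `lam * abs(theta)` (lasso-style). This separable setup is a simplifying assumption, not the general algorithm, and the best-fit values below are invented.

```python
def ridge_1d(b, lam):
    """Minimizer of (theta - b)**2 + lam * theta**2: shrink by a constant factor."""
    return b / (1.0 + lam)

def lasso_1d(b, lam):
    """Minimizer of (theta - b)**2 + lam * abs(theta): shift towards zero, then clip."""
    shrunk = max(abs(b) - lam / 2.0, 0.0)
    if shrunk == 0.0:
        return 0.0
    return shrunk if b > 0 else -shrunk

best_fit = [4.0, -0.3, 0.2, 2.5]   # hypothetical unregularized parameters
lam = 1.0

ridge = [ridge_1d(b, lam) for b in best_fit]
lasso = [lasso_1d(b, lam) for b in best_fit]
print("ridge:", ridge)
print("lasso:", lasso)
```

The ridge-style penalty scales every parameter down but never reaches zero unless the best fit was already zero. The lasso-style penalty subtracts a fixed amount, so any parameter smaller than `lam / 2` lands exactly on zero.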

Both Ridge and Lasso regression are used in practice. The details of your application should influence your choice. I’ll also note in passing that “compromise algorithms” like Elastic Net exist, which try to capture the best parts of both.
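
As a rough sketch, an Elastic Net style penalty blends the two parameter norms. The parameterization below (an overall weight `lam` and a mixing fraction `l1_ratio`) is one common convention that I am assuming here; libraries differ in the exact scaling constants.

```python
def elastic_net_penalty(params, lam, l1_ratio):
    """Blend of the L1 size and the squared L2 size of the parameters."""
    l1 = sum(abs(p) for p in params)
    l2_squared = sum(p * p for p in params)
    return lam * (l1_ratio * l1 + (1.0 - l1_ratio) * l2_squared)

params = [3.0, -4.0]   # hypothetical parameters

print(elastic_net_penalty(params, lam=2.0, l1_ratio=1.0))   # pure lasso-style penalty
print(elastic_net_penalty(params, lam=2.0, l1_ratio=0.0))   # pure ridge-style penalty
print(elastic_net_penalty(params, lam=2.0, l1_ratio=0.5))   # an even blend
```

Sliding `l1_ratio` between 0 and 1 moves the penalty between the ridge-style and lasso-style extremes.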

Takeaways

I hope you enjoyed this whirlwind tour of regularization. For a more detailed look at ridge vs lasso, I recommend reading this.

Until next time.
